3/4/2024

A New Frontier in How We Hear

How Grainger Engineers are working to give the world superhuman hearing and improve technology for the hard of hearing.

Take a moment and listen to the world around you. The soft pitter patter of rain against a roof. The bright, crisp crunch of autumn leaves underfoot. The slightly distorted notes of music from a downtown restaurant fighting to be heard amongst car engines. For many people, these are the accents in the song of everyday life. Sharp, soft, clear and subtle, all are familiar to the human ear.

But just because the human ear can hear a sound doesn’t mean that sound is understandable.

For example, imagine being out at a restaurant with your friend. The music is loud and dozens of conversations are happening around you. Your friend says something, but it’s impossible to understand them over the cacophony of the house music and fellow diners.

But what if you could hear them simply by tuning out everything else around you? No, not by ignoring everything, but by actually turning off the sound of the environment save for what you want to hear?

That’s actually what a group of researchers is working on in the Illinois Augmented Listening Laboratory at the University of Illinois Urbana-Champaign’s Grainger College of Engineering.

Using 3D printers, microphones, acoustics, and a team of dedicated graduate students led by two professors (Andrew Singer and Ryan Corey), this lab wants to lay the groundwork to give society superhuman hearing. They call it augmented listening.

So, how are they going to do that?

Follow me.

Tuning in and Tuning Out

Now before you start thinking you can one day hear like Superman, let’s take a look at what superhuman means. One definition is ‘exceeding normal human power, size, or capability.’ Think Batman rather than Superman: he has both the tools and the ingenuity to bring his ideas to life. But there’s no cape and cowl here. Rather, it’s the latest example of how Grainger Engineers are making advances in medical engineering and making the probable possible in healthcare innovations.

Giving people superhuman hearing is all about the tools. In this case, microphones.

“Microphones are prevalent in today’s society,” says Kanad Sarkar, ECE graduate student. “At the same time there are a variety of noisy environments which can interfere with a good conversation. We foresee a world where these microphones can actively aid in-person conversations!”

Believe it or not, there’s a good chance you already own devices that would be key to making this concept a reality. You might even be wearing them right now.

“We have the technology for this type of work to be conducted in the form of our AirPods and our phones. They’re cheaper now than ever and they’ll just continue to get cheaper,” says Sarkar.

Apple’s AirPods aren’t technically hearing aids, but they can amplify sound. The National Council on Aging has even published an article on how the devices could revolutionize hearing support for people of all ages.

“They allow you to load an audiogram onto your phone and personalize the output sound,” explains Manan Mittal, ECE graduate student and team lead of the Augmented Listening Laboratory located in the Coordinated Science Lab. “They also scan your ears for a personalized spatial sound experience.”
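As a rough illustration of what “personalizing the output sound” from an audiogram can mean, here is a minimal Python sketch of the half-gain rule, a classic and deliberately simplified hearing-aid fitting heuristic that boosts each frequency by about half the measured hearing loss. It is not Apple’s method, which isn’t described here; the audiogram values, the 30 dB safety cap, and the function names are all hypothetical.

```python
import numpy as np

# Hypothetical audiogram: hearing loss (dB HL) at standard test frequencies.
AUDIOGRAM_HZ = np.array([250, 500, 1000, 2000, 4000, 8000], dtype=float)
AUDIOGRAM_DB = np.array([10, 15, 25, 40, 55, 60], dtype=float)


def half_gain_db(loss_db, max_gain_db=30.0):
    """Half-gain rule: boost each band by half its hearing loss, capped for safety."""
    return np.minimum(np.asarray(loss_db, dtype=float) / 2.0, max_gain_db)


def personalize(signal, fs, freqs_hz=AUDIOGRAM_HZ, loss_db=AUDIOGRAM_DB):
    """Apply audiogram-derived gains to a mono signal in the frequency domain."""
    spectrum = np.fft.rfft(signal)
    bin_freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    # Interpolate the band gains on a log-frequency axis; bins below 250 Hz or
    # above 8 kHz reuse the nearest band's gain (np.interp clamps at the ends).
    gain_db = np.interp(
        np.log2(np.maximum(bin_freqs, 1.0)),
        np.log2(freqs_hz),
        half_gain_db(loss_db),
    )
    return np.fft.irfft(spectrum * 10 ** (gain_db / 20.0), n=len(signal))


if __name__ == "__main__":
    fs = 16_000
    t = np.arange(fs) / fs
    tone = np.sin(2 * np.pi * 4000 * t)  # a 4 kHz test tone
    louder = personalize(tone, fs)
    print(f"gain at 4 kHz: {20 * np.log10(louder.max() / tone.max()):.1f} dB")
```

Real fitting formulas also account for loudness, compression, and speech intelligibility, so treat this only as a picture of the basic idea: the audiogram becomes a frequency-dependent gain curve applied to the sound you hear.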

It’s the kind of technology – accessible, easy, and even trendy – that researchers were waiting for. With the first hurdle cleared, the next step is to create technology that can be utilized as an actual conversation enhancer.

This leads us to the crux of the issue: ‘The Cocktail Party Problem.’

“The Cocktail Party Problem is where you have numerous talkers in a room, and you’d like to separate them or enhance them,” says Mittal. “You want to be able to distinguish one talker from another. When we try to reproduce the spatial cues for a particular listener, we call it listening enhancement.”

Remember the scenario where you and your friend are in a restaurant filled with other diners and loud music? This is the modern-day version of the Cocktail Party Problem. Researchers want to give people the ability to enhance the sound coming from the people and things they want to hear while simultaneously decreasing background noise.

“The idea of the research can go far beyond what just hearing aids can do. It can be desirable to enhance talkers spatially. I want to choose locations in the room where people sound realistic to me and all other sound in the room is removed,” says Mittal.

The concept could serve more than one purpose, too. Mittal adds, “We’d like to be able to enhance hearing aid algorithms to the point that someone with severe hearing loss is able to use these algorithms and benefit from them.”

It’s a benefit for people with and without hearing loss alike. Improved technology for all. This is the future that researchers want to achieve. But getting there requires not just research, but also some very specialized equipment, including a ‘talking head.’

Printing Possibilities

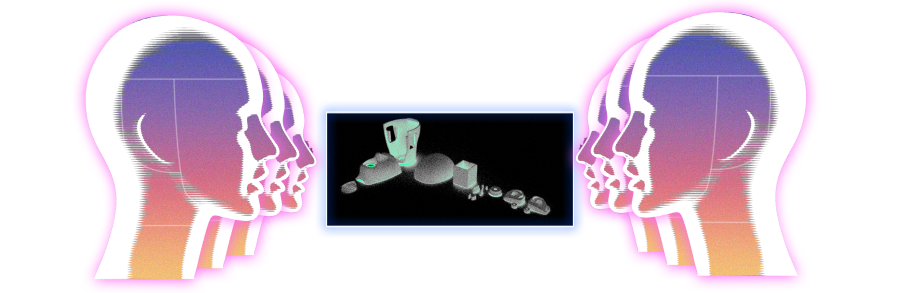

A talking head is pretty much how you would imagine it to be. Well, kind of.

These heads don’t talk in the traditional sense with vocal cords and a moving jaw. Instead, they use a speaker. They ‘listen’ too. But instead of listening with ear drums, they have microphones.

Using 3D printers, researchers can build the components for a replica of a human head with room for those upgrades. At roughly $50 to $60 per head, it’s a steal compared with the $30,000 commercial-grade units built to do the same thing.

“Many researchers cannot afford one, let alone multiple commercial head and torso simulators,” explains Mittal. “So, this project presents a path for researchers to collect large datasets with binaural cues and multiple active talkers and listeners. At the very least, we think it is a step in the right direction.”

Plus, they’re smaller, which makes them convenient for experiments.

“Our latest design lets us move the heads around without worry,” says Austin Lu, ECE graduate student. “They are inexpensive and robust, which makes it less risky to do big ambitious experiments that simulate lots of people or lots of motion.”

The heads can move on their own via silent, multi-axis turrets, similar to how a human neck would move.

You may be asking yourself why not just use humans for this task? There are plenty of those around, especially on a college campus. The answer: inconsistency.

“I could run an experiment with people, but we’d be here for hours, and it’s not reproducible,” says Sarkar. “We want to have replicable data. We want to be able to have time to investigate the differences between simulation and real-life acoustics. Ideally, the process is automated. It makes things easier for us.”

It also makes research more accurate. Other research groups would place microphones a head’s width apart to mimic people in a conversation. But that method didn’t account for the physical mass surrounding our inner ear and how it shapes the way sound arrives there. Nor did it account for the fact that a listener in a conversation is more than likely a speaker, too.

The first version of the head was built out of wood in 2020 by an industrial design student. Pieces were cut, sculpted, and then glued together to form an artisanal model of a human head with room for a speaker and microphones. It’s gone through multiple iterations since then. As the group explored how to roboticize their experiments, they decided to switch to 3D printed, biodegradable plastic heads via an open-source blueprint. Not only did this make it easy to produce more heads to experiment with, but it also meant they could be created relatively quickly.

But with innovation came growing pains.

“Once we got the printers to work, it was hard nailing down a model that actually was easy to construct,” says Mittal. “It looks nice when it’s completed, but it took a lot of time to even have it stay assembled.”

These heads aren’t printed as a complete piece. Instead, they’re a modular design with the intention of being able to pull them apart and replace pieces as necessary. However, the heads seemed to take the ability to ‘come apart’ a bit too literally.

“We had a problem for a while where they would kind of ‘explode,’” says Lu. “Many of the constituent parts wouldn’t interlock well, and everything was just too fragile for actual use.”

The modular design has another benefit, too, especially since the material they work with is plastic and the design naturally has voids inside.

“By opening the head up, we can swap out parts with different materials, add acoustic dampening, and more. This allows us to work around the underlying problem: that plastics have very different acoustics from skin and bone,” explains Lu. “It works surprisingly well.”

All of this adds up to a relatively inexpensive, accessible, and modifiable tool that can be used for experimentation: all characteristics that could make it attractive to other researchers studying similar topics.

“We hope that researchers around the world are able to use this tool to develop better algorithms for hearing aids, assistive listening systems, or in general to enhance the listening experience of current audio products,” says Mittal.

One aspect of the heads that you might not realize is extremely important: ears. Why create so many different types of ears that can be detached from and reattached to these heads?

It turns out our ears, cartilage, earlobes and all, are a pretty big deal in how we hear.

All About Ears

Let’s get back to basics.

Ears obviously play an important part in our hearing. But how exactly do people hear? The simple answer is that sound waves travel through the ear canal, causing your eardrum to vibrate. Three tiny bones right behind the eardrum amplify the vibrations and send them to a fluid-filled part of your inner ear called the cochlea. The fluid ripples, bending tiny hair cells that turn the motion into electrical signals, which travel along the auditory nerve to your brain and tell you what the sound was.

Now here’s something you probably didn’t expect: Ear shape also comes into play for exactly how we perceive sounds and where those sounds come from. Think of them as small satellite dishes that take in information all around you.

“We can tell just from listening whether something is in front of us or behind, to the left or to the right,” says Lu. “You get this really good localization ability with only your two ears.”

Their shape and position also affect how each person perceives the sounds around them. Sarkar says there are slight differences in how two people hear the same thing.

“It’ll be relatively negligible. It’s not like a person with big ears won’t be able to perceive something, but there’s going to be slight differences just like with how we perceive light as well.”

According to the National Institutes of Health, the average human can hear sounds from 20 Hz to 20,000 Hz (20 kHz). But from person to person, there are slight differences in how sounds are perceived. (We’ll touch more on this later!) The team also explained that the shape of a person’s torso affects how sound reaches them. For most people, this doesn’t seem like a big deal. But these intricacies are very important in this line of research, which is why the team also designs and prints different ears to experiment with. The cost to do so? Under $3.

But it’s not always as simple as designing a template and printing.

“Getting the tolerancing right is a struggle. We want the ears to fit snugly on the heads, but we are working with different filaments and somewhat dated 3D printers,” says Lu.

Beyond the ability to control the printing process, there are benefits to 3D printing the ears, including taking advantage of the lightweight material while reducing the likelihood of breakage. Intricate details also aren’t an issue for 3D printing, and the team says when it comes to the human head, ears are about as intricate as it can get. It’s even possible to 3D scan people’s ears for more accurate replication.

But design and theory are only part of this puzzle. Remember that line in parentheses several paragraphs ago? Let’s take a deeper look at that.

Finding the Right Frequency

3D printed heads? Check.

Interchangeable ears? Check.

The desire to improve human hearing above and beyond? Check.

The base components are here, but there is still another check needed for this research. A different kind of check, really. You could call it a mic check.

“Acoustics is, in part, nature. We’re trying to understand nature and harness it,” says Sarkar. “At the end of the day, we’re taking from what’s already understood from a physics perspective.”

Some owls have asymmetrical ears, so sound reaches one ear slightly before the other. This actually lets them pinpoint where a sound is coming from more precisely than humans, whose ears are symmetrical.

Whales can hear across as many as 12 distinct octaves underwater, compared with 8 for humans, which helps them stay alert for danger and food.

The pistol shrimp can make sounds louder than a real pistol or a lion’s roar. How? Part of its bigger claw acts as a ‘hammer’ that stores up energy, and once that energy is released: BAM! The craziest part? The shrimp is only about as long as your finger!

Acoustics is a branch of physics concerned with the properties of sound, including the vibrations in the air that allow humans and animals to hear. To improve hearing, researchers are leveraging acoustical properties through microphones, algorithms, machine learning, and an understanding of the concept called binaural cues.

Sound reaches your two ears at slightly different times depending on where it comes from. A person speaking from your left, for example, is heard a fraction of a millisecond earlier and a little louder in your left ear than in your right. Head-related transfer functions (HRTFs) take this a step further: they describe how your head, torso, and outer ears filter sound arriving from each direction. That filtering is how we can tell where a noise is coming from, whether it’s to the left, right, in front, or behind us. Your HRTF, like your ear shape, is also unique to you.
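The timing half of these binaural cues can be estimated with a textbook model. The Python sketch below uses Woodworth’s rigid-sphere approximation, ITD = (a/c)(θ + sin θ), where a is the head radius, c is the speed of sound, and θ is the source’s angle off center. The 8.75 cm radius and 343 m/s speed of sound are assumed typical values, not measurements of the lab’s printed heads.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in room-temperature air (assumed)
HEAD_RADIUS = 0.0875    # m; a commonly used average head radius (assumed)


def itd_seconds(azimuth_deg, radius=HEAD_RADIUS, c=SPEED_OF_SOUND):
    """Interaural time difference from Woodworth's rigid-sphere model.

    azimuth_deg: 0 = straight ahead, 90 = right, 180 = behind, -90 = left.
    A positive result means the sound reaches the right ear first.
    """
    az = math.radians(azimuth_deg)
    # Fold the azimuth onto a lateral angle in [-90, 90] degrees.
    # Front and back positions fold onto the same angle, so they share an ITD.
    lateral = math.asin(math.sin(az))
    return (radius / c) * (lateral + math.sin(lateral))


if __name__ == "__main__":
    for az in (0, 30, 90, 150, -90):
        print(f"{az:>4} deg -> {itd_seconds(az) * 1e6:6.1f} microseconds")
```

The model also shows why timing alone can’t separate front from back: a source 30° to the right of straight ahead and one at 150° (behind and to the right) produce the same delay. Resolving that ambiguity takes the direction-dependent spectral filtering that an HRTF captures.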

With that in mind, how does the team plan on improving people’s hearing if everyone perceives sound differently and has a unique HRTF? The answer: collect a large sample of HRTFs for general use.

These databases already exist. If you’ve played a video game, there’s a chance you’re familiar with this concept without realizing it. Immersive audio can give gamers a competitive edge. Some games, like Valorant, now ship with an option to use HRTF instead of standard stereo. Even consoles like the PlayStation 5 now offer HRTF-based audio, though you might recognize it marketed as 3D sound.
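In practice, an HRTF is often stored as a pair of head-related impulse responses (HRIRs), one per ear, and this kind of 3D sound can be produced by convolving a mono sound with the pair measured for the desired direction. The Python sketch below shows that rendering step under simplifying assumptions; `toy_hrir_pair` is a hypothetical stand-in that models only a delay and a level drop at the far ear, whereas HRIRs measured for a real database also carry the spectral coloring of the ears, head, and torso.

```python
import numpy as np


def render_binaural(mono, hrir_left, hrir_right):
    """Place a mono sound in space by convolving it with one HRIR per ear.

    Returns an array of shape (2, len(mono) + len(hrir) - 1): row 0 is the
    left-ear signal, row 1 the right-ear signal. Both HRIRs must be the same length.
    """
    left = np.convolve(mono, hrir_left)
    right = np.convolve(mono, hrir_right)
    return np.stack([left, right])


def toy_hrir_pair(itd_samples, ild_db, length=32):
    """Hypothetical HRIR pair for a source on the listener's left.

    The left ear gets a unit impulse; the right (far) ear gets the same impulse
    delayed by itd_samples and attenuated by ild_db. Measured HRIRs would also
    include the ear, head, and torso filtering that this toy leaves out.
    """
    left = np.zeros(length)
    left[0] = 1.0
    right = np.zeros(length)
    right[itd_samples] = 10 ** (-ild_db / 20.0)
    return left, right


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    voice = rng.standard_normal(48_000)  # stand-in for one second of audio at 48 kHz
    hl, hr = toy_hrir_pair(itd_samples=30, ild_db=6.0)  # 30 samples is about 0.6 ms at 48 kHz
    stereo = render_binaural(voice, hl, hr)
    print(stereo.shape)
```

Rendering with HRIRs measured on a different head changes those spectral cues, which is one reason a mismatched HRTF can blur the sense of front versus back and up versus down.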

“We’ve tried hearing through each other’s HRTFs. It’s really awful. Suddenly you can’t hear from front to back, nor up from down,” says Lu. “It shows why the personal ear shape matters, why it’s good that we can pop those ears out and just print a new one in under an hour.”

Microphones play a bigger part in this research than just being placed inside the heads to act as ear drums. They’re also used throughout rooms where research is being performed. Believe it or not, this also helps train algorithms to improve hearing.

A microphone array is a system that uses multiple microphones together to capture audio for a range of uses, including acoustic source localization and environmental noise monitoring. For their experiments, the team has set up its own microphone arrays. With signal processing, it’s possible to isolate a specific sound, such as a person talking, and the team uses this together with the heads to pinpoint talkers in a simulated conversation. How would this reach a listener? Much as your cell phone connects to Wi-Fi or Bluetooth, the idea is that a wearable device could link up to a microphone array system to enhance hearing or filter out sound at that location.
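One textbook way to isolate a specific sound with a microphone array is delay-and-sum beamforming: shift each microphone’s signal so the target talker’s sound lines up across channels, then average. The Python sketch below assumes known microphone and talker positions, free-field propagation, and whole-sample delays. The lab’s actual algorithms aren’t specified here, so this is an illustrative stand-in, not their method; the function names are hypothetical.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s (assumed)


def steering_delays(mic_positions, source_position, fs, c=SPEED_OF_SOUND):
    """Per-microphone arrival delay, in whole samples, relative to the closest mic.

    mic_positions: array of shape (num_mics, 3) in meters.
    source_position: array of shape (3,) in meters.
    """
    dists = np.linalg.norm(mic_positions - source_position, axis=1)
    return np.round((dists - dists.min()) / c * fs).astype(int)


def delay_and_sum(recordings, delays):
    """Align each channel on the target's arrival time and average.

    recordings: array of shape (num_mics, num_samples).
    Sound from the target adds up coherently; uncorrelated noise at each mic
    does not, so its power drops by roughly a factor of num_mics.
    """
    num_mics, n = recordings.shape
    out = np.zeros(n)
    for channel, d in zip(recordings, delays):
        # Advance a later-arriving channel by d samples; its last d output
        # samples receive nothing from this channel.
        out[: n - d] += channel[d:]
    return out / num_mics


if __name__ == "__main__":
    fs = 16_000
    # Hypothetical 4-mic line array with 10 cm spacing and a talker 2 m away.
    mics = np.array([[0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [0.2, 0.0, 0.0], [0.3, 0.0, 0.0]])
    talker = np.array([2.0, 1.0, 0.0])
    print(steering_delays(mics, talker, fs))
```

Practical systems typically use fractional delays and more adaptive beamformers, but the principle is the same: the array “listens” hardest in the direction whose delays it compensates for.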

In some places, this is already possible. For example, some classrooms and conference rooms already have large microphone arrays installed that could be used for this purpose.

“I may not have 50 mics [to use for an experiment], but I can go into a building that could have all the facilities there for a specific room to have full audio control,” says Sarkar. “What this will do is give super hearing in an enclosed environment.”

There are already phone apps that amplify what your phone’s microphone is picking up and can be used with headphones. It’s not far-fetched to think a microphone array system could one day be common in society. Many buildings and businesses already have Wi-Fi for public use, but it wasn’t commonplace until the public started using their cell phones everywhere. The team is hopeful the same phenomenon will happen here, making superhuman hearing a reality.

As for that restaurant?

“When I walk in, the augmented listening system connects to my hearing device and teaches it to enhance sound in a bubble around me,” says Mittal. “The idea is to be able to incorporate it in situations where you need it, just like you’d use a microscope or telescope.”

It’s more than fair to say all of this requires a significant amount of science and engineering. Those are elements you can quantify: the numbers plotted out for measurement and analysis. What you can’t quantify is the sheer amount of heart and soul this requires, too.

The Heart Behind the Plastic

On the eastern side of the northernmost quad of the University of Illinois Urbana-Champaign campus sits the Coordinated Science Laboratory. If you climb down the stairs to the basement, turn right, and walk down a long hallway, you’ll find where the researchers of the Augmented Listening Laboratory call home.

Behind a heavy-duty metal door is a small space with more than enough room for computer and sound equipment, robotics, plastic heads, rigging, and other necessary research items either stacked on shelves or placed on the floor. The walls on one end of the room are covered in sound-dampening material, while the rest are peppered with research posters of previous lab work.

This room isn’t just where research and analysis happen. It’s also where creativity, ingenuity, and exploration are continuously encouraged.

“Having environments where people get to explore different things is a luxury,” says Sarkar. “We came in, and we were blessed to have advisors who went out of their way to cultivate creativity, especially for undergraduate research opportunities.”

Austin Lu

Kanad Sarkar

Manan Mittal

That freedom to explore turned Mittal, Sarkar, and Lu from undergraduate students taking a class in technology entrepreneurship into graduate students who are both helping steer the direction of research and teaching the very classes they once sat in. The work of one of their advisors, ECE Adjunct Research Assistant Professor Ryan Corey, has become both their work and mission.

“This research is about human beings. That has been the target audience since its conception,” says Mittal. “This research aims to impact society the same way most human-centric research eventually hopes to impact society: by bringing a positive change to the daily experience of the target audience.”

Mittal and Sarkar joined the lab five years ago as sophomores. They were first employed by Corey when he was working on his doctoral thesis under the tutelage of ECE Professor Andrew Singer, the founder of the Augmented Listening Laboratory. A hearing aid user since childhood, Corey focused his work on augmenting human listening and hearing aid platforms. One of his projects with Singer was the concept of using wearable microphone arrays to improve audio data sets, which has inspired the work currently done in the lab. Corey eventually became a co-faculty advisor of the lab with Singer while also becoming an assistant professor at the University of Illinois Chicago.

Andrew Singer

Ryan Corey

The team explains that research allows students to discover not only their interests, but also whether or not they enjoy research at all. They acknowledge that the chance to jump into undergraduate research helped lead them to this lab. It’s what they want for other students, too.

“We don’t know what is going to happen after us, but we know that it will continue,” says Sarkar. “We don’t know who the next group of researchers will be, but we do have continual interest from undergrads.”

This research won’t just continue. It’s also expanding.

Mittal is transferring to Stony Brook University in New York, where Singer is now Dean of the College of Engineering and Applied Sciences, to complete his graduate degree. Mittal plans to continue this project there, while Sarkar and Lu continue research at the UIUC lab under the guidance of Corey at UIC.

The move, while planned, is bittersweet.

“I was thinking about this very recently that it is actually terrible to leave this space,” says Mittal. “It’s where I decided that I want to do research with my life. We got to see our own students grow, which is an incredible opportunity as a graduate student.”

The lab may be the home of this research, but home isn’t just four walls. It’s friends and family: the relationships the researchers have built with one another in this lab over the years.

“A professor here once told me, ‘Be careful who you sit in the same room with. You might be friends forever’,” says Mittal.

Keep Listening

So, what’s next?

Research continues at the Augmented Listening Lab within the Grainger College of Engineering at the University of Illinois Urbana-Champaign. The team has new printers and a commercial-grade head and torso dummy for acoustic research, although they still plan to 3D print heads and ears.

A twin lab already exists at the University of Illinois Chicago with Corey. The team is looking forward to the third lab being set up at Stony Brook University.

Three labs. One goal.

For the moment, superhuman hearing is a dream.

But how long until it becomes a reality?

We’ll just have to keep our ears open, now won’t we?

Acknowledgments

This multimedia piece was produced by members of the Grainger College of Engineering Marketing and Communications Team.

Written and Voiced by Lauren Laws

Graphics/Video/Animations by Nicholas Morse and Callie Clinch

Web Assistance and Coding by Josh Potts

Additional Web Assistance by Laura Hayden

Special thanks to Manan Mittal, Kanad Sarkar, and Austin Lu for their time. It has been a pleasure, and we wish you success on your endeavors!

If you would like to learn more about the Augmented Listening Laboratory and their work, you can find additional information through the links below.

- Innovation in Augmented Listening Technology

- Prof. Ryan Corey’s Profile

- Prof. Corey’s Website at UIC

- Prof. Andrew Singer’s Website

- Prof. Singer’s Profile at Stony Brook